11 Best Practices For Effective A/B and Adaptive Testing

Do you ever stress over whether your prospects prefer the blue call-to-action background or the green one, or maybe that the word "FREE!" is off-putting to your target?

There are many small choices regarding content offers and how they're promoted that can affect success. These choices are rarely tested for statistical significance, yet each one could realistically sway a prospect's decision to either convert or go to a competitor.

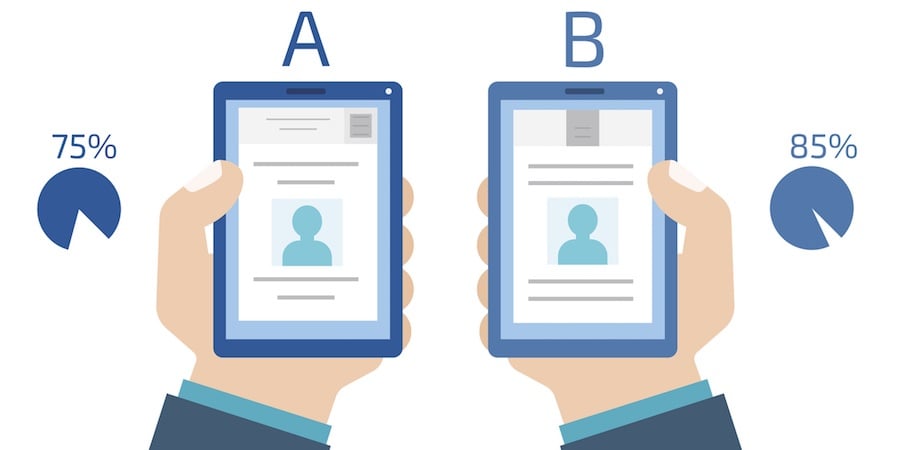

Conducting A/B tests, which present different variations of the same offer to your audience, helps quantify what appeals to your prospects. In turn, applying what you learn improves your conversion rates, especially when you follow these tried-and-true A/B testing best practices and testing tips.

A/B Testing Best Practices

- Identify what to test

- Create “A” and “B” versions

- Don't rely on assumptions

- Determine what to measure

- Define KPI measurement methods

- Set up your A/B test

- Run your A/B test

- Analyze the results

- Replicate the results

- Apply the results

- Test again

Identify what to test

There is no shortage of website elements that you could A/B test (font, size, shape, colors, image, wording, placement, etc.) on your site pages, landing pages, forms, chatbots and CTAs, but there’s rarely time to test them all.

You need to concentrate your efforts on pages with the most impact — specifically those with high traffic, or those with decent traffic but underperforming conversion rates. These elements are ideal for testing:

- Headlines and subheads

- Landing page meta descriptions to influence click throughs

- Call-to-Action (CTA) copy, colors, and placement

- Image and video placement and its impact on page engagement

Even as you narrow your list of elements, don’t just dive into testing. Test only one element at a time, or you won't understand what triggered the conversion.

RELATED: 10 Landing Page Guidelines to Maximize Conversions & Improve Inbound Results

Create “A” and “B” versions (and “C,” “D,” and “E” versions, if necessary!)

After deciding what single element to test, create variations — as few as two and up to several variations of a headline, landing page, CTA copy, or supporting imagery.

For instance, consider these wording options for a form or CTA button:

- Download Now

- Learn More

- Get the eBook Now

- Get Your FREE Copy

- Submit

- Yes, I Want This!

If you are using an adaptive test (a robust testing tool in HubSpot CMS Hub Enterprise), you can test multiple variations, up to a total of five. HubSpot will dynamically determine the best-performing variation and send more traffic to it over time to increase conversions.
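To make the idea of "sending more traffic to the best performer" concrete, here is a minimal Python sketch of one common way to do it, called Thompson sampling. It is not taken from HubSpot, and it may differ from what the adaptive test does internally. The function names (`choose_variation`, `simulate`) and the conversion rates are assumptions made up for illustration.

```python
import random

def choose_variation(stats, rng):
    """Pick which variation the next visitor sees (Thompson sampling).

    stats maps a variation name to a (conversions, views) tuple.
    """
    best_name, best_draw = None, -1.0
    for name, (conversions, views) in stats.items():
        # Draw a plausible conversion rate from a Beta distribution.
        # Variations with little data get wide, "lucky" draws, so they
        # still receive some traffic early on.
        draw = rng.betavariate(1 + conversions, 1 + views - conversions)
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name

def simulate(true_rates, visitors, seed=0):
    """Simulate visitors against hypothetical true conversion rates."""
    rng = random.Random(seed)
    stats = {name: (0, 0) for name in true_rates}
    for _ in range(visitors):
        name = choose_variation(stats, rng)
        conversions, views = stats[name]
        converted = rng.random() < true_rates[name]
        stats[name] = (conversions + int(converted), views + 1)
    return stats

if __name__ == "__main__":
    # Hypothetical rates: variation B converts far better than A.
    for name, (conversions, views) in simulate({"A": 0.05, "B": 0.20}, 5000).items():
        print(name, "views:", views, "conversions:", conversions)
```

In a run like this, most of the simulated visitors end up on the stronger variation, which is the behavior the adaptive test description above is aiming for.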

Don’t rely on assumptions

When you conduct a test, don’t assume that you know what will resonate with prospects unless you have recent statistics to back it up. You may think that “Download Now” is the ideal CTA button copy, when your data shows that “Read it Now” performs better because much of your traffic comes from mobile devices (where users short on storage are loath to download something).

Don’t shortcut the process and jump to conclusions about prospects’ reactions. Instead, review the analytics!

Determine what to measure

If you’re not clear what you’re trying to improve, no amount of A/B testing will be helpful.

Understanding how you measure success is critical. For example, if you’re changing a landing page to use a chatbot instead of a form, hypothesize which mechanism will produce the higher conversion rate and document your estimate before the test begins. Then record the actual results so you can compare them against that estimate.

Define KPI measurement methods

Once you know what you want to measure, determine how you’re going to measure it.

If you have a website platform that provides analytics, such as HubSpot or Squarespace, it may already be set up to gather the data you need. If not, Google Analytics, Google Tag Manager, HotJar's funnel tracker, and numerous other tools are available to track specific metric goals against your objectives.

Be very specific about how you’re going to measure your key performance indicators (KPIs) so there’s no ambiguity in the data.

Results can also be skewed. Filter out data generated by people in your organization who are clicking on CTAs. This is less important for websites or landing pages with lots of visits, as your internal percentage will be low, but for more niche products and services it’s extremely important.
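If your analytics tool lets you export raw click data, filtering out internal traffic can be as simple as the Python sketch below. The IP ranges, the `ip` field name, and the function names are all hypothetical; most analytics platforms also offer a built-in setting for excluding internal traffic, which is usually the easier route.

```python
import ipaddress

# Hypothetical office and VPN address ranges. Replace with your own.
INTERNAL_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]

def is_internal(ip):
    """Return True if the IP address falls inside an internal range."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in INTERNAL_NETWORKS)

def external_events(events):
    """Keep only events that did not come from inside the organization.

    Each event is assumed to be a dict with an "ip" key.
    """
    return [event for event in events if not is_internal(event["ip"])]

if __name__ == "__main__":
    clicks = [
        {"ip": "203.0.113.7", "action": "cta_click"},   # office network
        {"ip": "192.0.2.44", "action": "cta_click"},    # outside visitor
    ]
    print(external_events(clicks))
```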

Set up your A/B test

Once you have determined your test variables, set up your tests. Many platforms can run them, including:

- HubSpot CMS Hub Professional or Enterprise

- Optimizely

- Google Optimize (discontinued by Google in September 2023)

Regardless of platform, you’ll need a control and a challenger. The control is the page or CTA you already have live; the challenger is the version with your new/different copy, image, or other element. Your chosen testing or marketing automation platform will segment your audience randomly between the control and the challenger.
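Your platform handles the random split for you, but it can help to see what such a split might look like. The Python sketch below is one common approach, assuming each visitor has a stable ID such as a cookie value. It hashes that ID so the same visitor always sees the same version. The function name `assign_variant` and the test name are hypothetical, and a given platform may do this differently.

```python
import hashlib

def assign_variant(visitor_id, test_name, variants=("control", "challenger")):
    """Deterministically assign a visitor to a variant.

    Hashing the test name together with the visitor ID gives an
    effectively random but repeatable split, so a returning visitor
    keeps seeing the same version.
    """
    key = f"{test_name}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]

if __name__ == "__main__":
    for visitor in ("visitor-101", "visitor-102", "visitor-103"):
        print(visitor, "->", assign_variant(visitor, "cta-copy-test"))
```

Including the test name in the hash means a visitor who lands in the control group for one test is not automatically in the control group for the next one.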

Run your A/B test

To run the A/B test effectively, determine a set length for the first test period. You might limit the test to a week, or maybe 1,000 page views. The choice is yours. The important thing is to run the A/B test long enough to truly see a pattern develop.
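If you want a rough sense of how long "long enough" is, a standard sample size estimate for comparing two conversion rates can help. The Python sketch below uses the usual normal-approximation formula. The function name, the 5% significance level, and the 80% power are conventional defaults chosen here as assumptions, not requirements of any particular platform.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Estimate visitors needed per variation to detect a change.

    baseline_rate: current conversion rate (for example, 0.05 for 5%)
    expected_rate: conversion rate you hope the challenger achieves
    alpha: acceptable false-positive rate (two-sided)
    power: chance of detecting the change if it is real
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)

if __name__ == "__main__":
    # Hypothetical example: hoping to lift conversions from 5% to 6%.
    print(visitors_per_variation(0.05, 0.06))
```

With these hypothetical numbers, detecting a lift from 5% to 6% takes roughly 8,000 visitors per variation. Smaller expected improvements need far more traffic, which is why low-traffic pages often need longer test periods.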

Your testing platform will randomly send website visitors to your control and challenger pages even though they all click on the same link. Having both pages live simultaneously controls for changes in audience and time period, because both versions are exposed to the same conditions.

Analyze the results

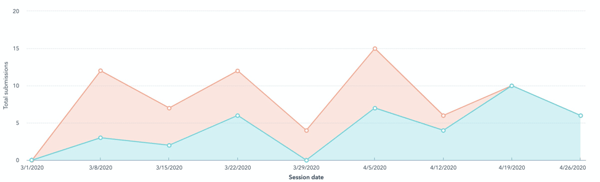

Once the test runs for the allotted period, analyze the data based on the metrics of success you defined earlier. Which CTA button text actually got the most clicks? Which graphics drew the greater number of conversions on your content piece?

You may be surprised at the results, which could well prove your hypothesis wrong. Maybe the variation you thought would drive more results actually caused your results to drop, or vice versa. Either way, you walk away from A/B testing better informed about your prospects than before you started.
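Before declaring a winner, it is worth checking whether the difference is larger than what chance alone would produce. Many testing platforms report this for you. If yours does not, the Python sketch below shows one standard check, a two-proportion z-test. The function name and the sample numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(conv_a, views_a, conv_b, views_b):
    """Two-sided p-value for the difference between two conversion rates.

    A small p-value (commonly below 0.05) suggests the difference is
    unlikely to be random noise alone.
    """
    rate_a = conv_a / views_a
    rate_b = conv_b / views_b
    pooled = (conv_a + conv_b) / (views_a + views_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 1.0  # no conversions (or all conversions) in both groups
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))

if __name__ == "__main__":
    # Hypothetical results: control 50/1000, challenger 80/1000.
    p = two_proportion_p_value(50, 1000, 80, 1000)
    print("p-value:", round(p, 4))
```

This approximation works best when each variation has a reasonable number of conversions. With only a handful of conversions per variation, treat any result with caution.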

Replicate the results

A scientific result can only be trusted if it is repeatable. Your initial results may be due to an external factor or simply noise in the signal.

If you can reliably recreate the test results, your confidence in their accuracy increases over time.

Apply the results

Once you have the results, implement what you learned throughout your website and content promotion strategy. If you know that certain text leads to more conversions, change the rest of your CTAs to reflect this.

Too often we test something but then do nothing with the results.

Test again

You had an idea. You tested it. You got results that verified the accuracy (or inaccuracy) of your hypothesis.

Great — now test another element to see if you can boost results even more!

A/B testing is kind of like compound interest: every little bit adds up on top of the other little bits. By constantly testing and iterating on your results, you’ll have a far better long-term return on your content.

A/B testing is a proven tool for conversion rate optimization and an effective part of an overall continuous improvement strategy. Learn more in our guide, The Growth-Driven Design (GDD) Methodology.
